No Need To Change Existing Systems, Service Providers, Or Institutions

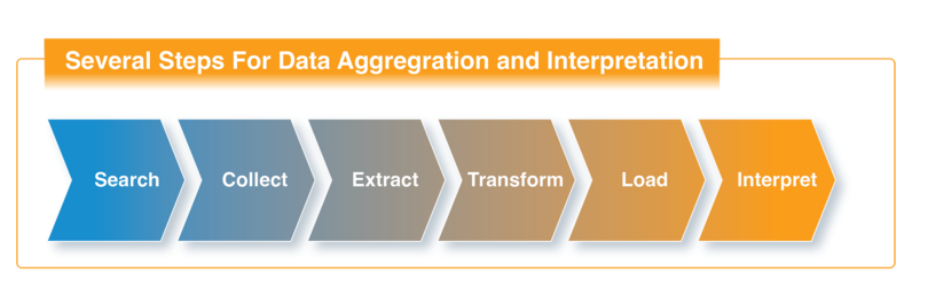

Data Aggregation & Normalization

The First Real-Time, Unified Business Technology Platform

Turn Your Data Into Information

Actionable Insights

Aggregation is the process of combining several numerical values into a single representative value. The operations that perform it are called aggregation functions. These functions are used wherever information must be summarized, for example in applied and pure mathematics (probability, statistics, decision theory, functional equations), operations research, computer science, and many other applied fields such as economics and finance, pattern recognition, image processing, and data fusion.
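As an illustration, the short Python sketch below applies a few common aggregation functions (sum, mean, median, maximum) to one list of values. The `aggregate` helper and the `daily_sales` figures are made up for this example and do not describe how the platform itself is implemented.

```python
from statistics import mean, median


def aggregate(values, func):
    """Collapse a collection of numbers into one representative value.

    `func` is any aggregation function, e.g. sum, mean, median, min, max.
    """
    if not values:
        raise ValueError("cannot aggregate an empty collection")
    return func(values)


# Hypothetical input: four days of sales figures (illustrative numbers only).
daily_sales = [120.0, 80.0, 100.0, 300.0]

total = aggregate(daily_sales, sum)      # 600.0
average = aggregate(daily_sales, mean)   # 150.0
middle = aggregate(daily_sales, median)  # 110.0
peak = aggregate(daily_sales, max)       # 300.0
```

Each function answers a different question about the same data. Here the mean (150.0) is pulled upward by the single large value, while the median (110.0) is not, which is why the choice of aggregation function matters.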

Normalization is a data transformation process whose objective is to align data values to a common scale (or distribution of values). Once values share a common scale, information can be generated and presented immediately, no matter what format the data originally arrived in. Normalization also entails adjusting the scale of values to a standard metric or adjusting the time scales of the data to comparable periods.
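Two widely used ways of bringing values onto a common scale are min-max scaling (mapping values to the range 0 to 1) and z-scores (centering on the mean and dividing by the standard deviation). The sketch below shows both. The function names and sample figures are assumptions chosen for illustration, not a description of the platform's own method.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_scores(values):
    """Express each value as its distance from the mean in standard deviations."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical metrics recorded on very different scales.
revenue_usd = [1000.0, 2500.0, 4000.0]
satisfaction = [2.0, 3.5, 5.0]

scaled_revenue = min_max_scale(revenue_usd)        # [0.0, 0.5, 1.0]
scaled_satisfaction = min_max_scale(satisfaction)  # [0.0, 0.5, 1.0]
```

After scaling, the two metrics can be compared or charted side by side even though one was recorded in dollars and the other on a five-point scale.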

Data normalization is generally regarded as the process of producing clean data.

Through data normalization, data is organized so that it is consistent across all records and fields. This standardization increases the cohesion of entries, which enables data cleansing and yields higher-quality data.

Simply put, this process ensures logical data storage by eliminating unstructured data and redundant (duplicate) data. When data normalization is performed correctly, the result is standardized information entry. For example, the process could govern how disparate types of data, such as URLs, contact names, street addresses, phone numbers, and even zip codes, are recorded. These standardized fields can then be grouped and read more quickly.
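A minimal sketch of this kind of field standardization follows. It assumes US-style ten-digit phone numbers and five-digit zip codes, and every function name, field name, and sample record is hypothetical. Once each field is written in one canonical form, duplicate entries become easy to detect.

```python
import re


def normalize_phone(raw):
    """Return a US-style phone number as NNN-NNN-NNNN, or None if it does not fit."""
    digits = re.sub(r"\D", "", raw)           # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                    # drop the leading country code
    if len(digits) != 10:
        return None
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


def normalize_zip(raw):
    """Return the five-digit zip code, discarding any ZIP+4 suffix."""
    digits = re.sub(r"\D", "", raw)
    return digits[:5] if len(digits) >= 5 else None


def normalize_name(raw):
    """Collapse repeated whitespace and apply title case."""
    return " ".join(raw.split()).title()


def normalize_record(record):
    return {
        "name": normalize_name(record["name"]),
        "phone": normalize_phone(record["phone"]),
        "zip": normalize_zip(record["zip"]),
    }


def deduplicate(records):
    """Normalize every record, then keep the first copy of each distinct one."""
    seen = set()
    unique = []
    for rec in map(normalize_record, records):
        key = (rec["name"], rec["phone"], rec["zip"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Two hypothetical entries for the same contact, typed in different styles.
raw_records = [
    {"name": "jane  DOE", "phone": "(555) 010-1234", "zip": "30301-1234"},
    {"name": "Jane Doe", "phone": "+1 555.010.1234", "zip": "30301"},
]

clean = deduplicate(raw_records)
# clean == [{"name": "Jane Doe", "phone": "555-010-1234", "zip": "30301"}]
```

The two raw entries differ character by character, so a naive comparison would treat them as separate contacts. After normalization they are identical, and only one is kept.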